If you’ve been following the Spliced Media synchronized play project of recent times, most recently in yesterday’s Spliced Audio/Video Styling Tutorial, you’ll probably guess what our “project word” would be, that being …

duration

… as a “measure” of importance to help with the sequential play of media, out of …

- audio

- video

- image

… choices of “media category” we’re offering in this project. But, what “duration” applies to image choice above? Well, we just hardcode 5 seconds for …

- (non-animated) JPEG or PNG or GIF … but …

- animated GIFs have a one cycle through duration …

… that we want to help calculate for the user and show in the relevant “end of” timing textbox. Luckily, we’ve researched this in the past but, as we find, every scenario is that bit different, and so here is the Javascript we’re using …

function prefetch(whatgifmaybe) { // thanks to https://stackoverflow.com/questions/69564118/how-to-get-xxduration-of-gif-image-in-javascript#:~:text=Mainly%20use%20parseGIF()%20%2C%20then,xxduration%20of%20a%20GIF%20image.
  // Only fetch when the URL looks like a GIF and differs from the last GIF requested
  if ((whatgifmaybe.toLowerCase().trim().split('#')[0].replace('/gif;', '/gif?;') + '?').indexOf('.gif?') != -1 && lastgifpreq != whatgifmaybe.split('?')[0]) {
    lastgifpreq=whatgifmaybe.split('?')[0];
    if (whatgifmaybe.indexOf('/tmp/') != -1) {
      lastgifurl='/tmp/' + whatgifmaybe.split('/tmp/')[1];
    } else {
      lastgifurl='';
    }
    document.body.style.cursor='progress';
    whatgifmaybe=whatgifmaybe.split('?')[0];
    //alert('whatgifmaybe=' + whatgifmaybe);
    fetch(whatgifmaybe)
      .then(res => res.arrayBuffer())
      .then(ab => isGifAnimated(new Uint8Array(ab)))
      .then(console.log);
  }
  return -1;
}

/** @param {Uint8Array} uint8 */
function isGifAnimated(uint8) { // thanks to https://stackoverflow.com/questions/69564118/how-to-get-xxduration-of-gif-image-in-javascript#:~:text=Mainly%20use%20parseGIF()%20%2C%20then,xxduration%20of%20a%20GIF%20image.
  let xxduration = 0; // total of frame delays, in hundredths of a second
  for (let i = 0, len = uint8.length; i < len; i++) {
    // Graphic Control Extension: 0x21 0xF9 0x04 ... 0x00, with the delay in bytes 4 and 5 (little endian)
    if (uint8[i] == 0x21
      && uint8[i + 1] == 0xF9
      && uint8[i + 2] == 0x04
      && uint8[i + 7] == 0x00) {
      const xxdelay = (uint8[i + 5] << 8) | (uint8[i + 4] & 0xFF);
      xxduration += xxdelay < 2 ? 10 : xxdelay;
    }
  }
  //alert('' + (xxduration / 100));
  if (xxduration / 100 > 0.11) {
    delay=xxduration / 100;
    if (('' + delay).indexOf('.') != -1) {
      //document.getElementById('end' + lastgifid).type='text';
      document.getElementById('end' + lastgifid).title='' + delay;
      document.getElementById('end' + lastgifid).value='' + delay;
      //alert(delay);
      //setTimeout(function(){ alert('DeLaY=' + delay + ' lastgifid=' + lastgifid); }, 2000);
    } else if (document.getElementById('end' + lastgifid)) {
      document.getElementById('end' + lastgifid).title='' + delay;
      document.getElementById('end' + lastgifid).value='' + delay;
      if (document.getElementById('audio' + lastgifid).value.toLowerCase().indexOf('.gif') != -1 && document.getElementById('audio' + lastgifid).value.replace(/^\/tmp\//g,'data:').indexOf('data:') == -1 && document.getElementById('audio' + lastgifid).value.indexOf('#.') == -1) {
        document.getElementById('audio' + lastgifid).value+='#.' + delay;
      }
      if (lastgifurl != '' && (176 == 176 || lastgifurl != lastgifurl)) { document.getElementById('audio' + lastgifid).value='' + lastgifurl; }
      setTimeout(function(){ if (1 == 1) { document.getElementById('taif').src='/PHP/animegif/tutorial_to_animated_gif.php?aschild=' + Math.floor(Math.random() * 19897865); } else { window.open('/PHP/animegif/tutorial_to_animated_gif.php?aschild=' + Math.floor(Math.random() * 19897865),'taif'); } }, 2000);
    }
  }
  document.body.style.cursor='pointer';
  return xxduration / 100; // a result of 0.1 means this is not an animated GIF
}

… in this project.
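To try the byte scanning idea on its own, away from the webpage, here is a minimal sketch that sums the frame delays of a GIF held in a Uint8Array. The function name gifDurationSeconds and the sample bytes are our own hypothetical illustrations, and, like the code above, it is a heuristic scan rather than a full GIF parser.

```javascript
// Hypothetical helper (not part of splice_audio.htm): sums the frame delays
// found in the Graphic Control Extension blocks of a GIF byte array.
function gifDurationSeconds(uint8) {
  let hundredths = 0;
  // A Graphic Control Extension is 0x21 0xF9 0x04, then packed flags, a two byte
  // little endian delay (hundredths of a second), a transparency index and 0x00.
  for (let i = 0; i + 7 < uint8.length; i++) {
    if (uint8[i] === 0x21 && uint8[i + 1] === 0xF9 &&
        uint8[i + 2] === 0x04 && uint8[i + 7] === 0x00) {
      const delay = (uint8[i + 5] << 8) | uint8[i + 4];
      hundredths += delay < 2 ? 10 : delay; // as above, tiny delays count as 10
    }
  }
  return hundredths / 100;
}

// Two fabricated extension blocks with delays of 50 and 25 hundredths of a second.
const sample = new Uint8Array([
  0x21, 0xF9, 0x04, 0x00, 50, 0x00, 0x00, 0x00,
  0x21, 0xF9, 0x04, 0x00, 25, 0x00, 0x00, 0x00
]);
console.log(gifDurationSeconds(sample)); // 0.75
```

In a browser you might feed it with fetch(url).then(res => res.arrayBuffer()).then(ab => gifDurationSeconds(new Uint8Array(ab))), which is the same chain prefetch uses above.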

Today, we start concertinaing multiple image asks (while still showing image order), using our favourite “reveal” tool, the details/summary HTML element dynamic duo, so that fewer images disappear “below the fold”, in …

- the changed client_browsing.htm client side local file browsing HTML and Javascript inhouse helper … to help …

- unchanged PHP “guise of” our Splicing Audio or Video inhouse web application (mainly adding $_POST[] navigation knowledge to the HTML template to work off) … that being …

- our changed splice_audio.htm live run
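To picture the details/summary idea, here is a minimal sketch that wraps each image in its own collapsed details element, numbering each summary so that the image order stays visible. The function name concertinaImages and the markup it produces are hypothetical illustrations, not the contents of client_browsing.htm.

```javascript
// Hypothetical sketch: build collapsed details/summary markup for a list of image URLs.
function concertinaImages(urls) {
  return urls.map(function (url, idx) {
    // Escape ampersands and double quotes so the URL stays inside the src attribute.
    var safe = String(url).replace(/&/g, '&amp;').replace(/"/g, '&quot;');
    return '<details><summary>Image ' + (idx + 1) + ' of ' + urls.length + '</summary>' +
           '<img src="' + safe + '" alt="Image ' + (idx + 1) + '"></details>';
  }).join('\n');
}

console.log(concertinaImages(['one.gif', 'two.png']));
// In a browser: someDiv.innerHTML = concertinaImages(listOfImageUrls);
```

Each details element starts closed, so only the summaries take up vertical space until the user clicks one open.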

Previous relevant Spliced Audio/Video Styling Tutorial is shown below.

We have “bad hair days”, but that doesn’t stop us seeking “styling days”. Yes, we often separate CSS styling into an issue that is addressed only if we deem the project warrants it, and we’ve decided this latest Spliced Audio/Video web application project is worth it. This means that further to yesterday’s Spliced Audio/Video Browsing Data URL Tutorial we have some new CSS styling in today’s work …

<style>

body {

background-color: #f9f9f9;

}

.animg {

width: 100%;

}

input[type=text] {

background-color: #e0e0e0;

border-radius: 7px;

margin-bottom: 2px;

}

input[type=number] {

background-color: #ebebeb;

border-radius: 7px;

}

input[type=submit] {

border-radius: 7px;

width: 20%;

}

#spif {

border-radius: 7px;

}

#taif {

border-radius: 7px;

}

input[type=checkbox] { /* thanks to https://stackoverflow.com/questions/24322599/why-cannot-change-checkbox-color-whatever-i-do */

border: 1px solid yellow;

filter: invert(80%) hue-rotate(88deg) brightness(1.7) opacity(0.8);

accent-color: cyan;

}

#audio1 {

width: 29% !important;

}

select {

background-color: lightblue;

border-radius: 7px;

}

</style>

Yes, no rocket science there. But, for the first time we can remember, we’ve turned a button …

<input onclick='fixhide(); fixstartend();' id='subis' style='text-align:center; background-color: pink; ' type='submit' value='Play Sequentially'></input>

… into (in the second phase of its existence within an execution run) a linear gradient inspired progress bar. The secret is the “hard stops”, for which we got great new advice from this link, thanks, to come up with dynamic CSS styling via Javascript …

function dstyleit(what) {
  var oney=what.split('yellow ')[1].split('%')[0] + '%,';
  what=what.replace(oney,oney + oney);
  document.getElementById('dstyle').innerHTML+='<style> #subis { background: ' + what + ' } </style>';
  return what;
}

function everysecond() {
  var addition=0.0;
  segstart+=1.0;
  if (segstart <= segend) {
    addition=eval(1.0 * (eval(segstart / segend) * (eval(nextpercent - basepercent))));
    if (segstart < segend) {
      setTimeout(everysecond, 1000);
    }
    document.getElementById('subis').style.background=dstyleit('linear-gradient(to right, yellow ' + Math.ceil(eval(basepercent + addition)) + '%, pink ' + Math.floor(eval(100 - eval(basepercent + addition))) + '%) !important');
  } else {
    addition=eval(nextpercent - basepercent);
    document.getElementById('subis').style.background=dstyleit('linear-gradient(to right, yellow ' + Math.ceil(eval(basepercent + addition)) + '%, pink ' + Math.floor(eval(100 - eval(basepercent + addition))) + '%) !important');
    segstart=0.0;
  }
}

function seqe() {

var suff=' of 1', lzero=0;

if (document.getElementById('end' + eval(1 + zero)) && (spareanvideo != '' || spareanimg != '' || spareanaudio != '' || spareelse != '' || zero > 0)) {

while (audiofiles[eval(0 + lzero)].trim() != '') {

lzero++;

}

suff=' of ' + lzero;

basepercent=eval(100 * (eval(0 + zero) / lzero));

nextpercent=eval(100 * (eval(1 + zero) / lzero));

if (audiofiles[eval(0 + zero)].indexOf('data:audio/') == 0) {

isaudio=true;

document.getElementById('subis').style.background=dstyleit('linear-gradient(to right, yellow ' + Math.ceil(eval(100 * (eval(0 + zero) / lzero))) + '%, pink ' + Math.floor(eval(100 - eval(100 * (eval(0 + zero) / lzero)))) + '%) !important');

document.getElementById('subis').value='Currently Playing Audio ' + eval(1 + zero) + suff; // + ': ' + audiofiles[eval(0 + zero)].replace(/\ /g,'+'); // + " via Google Translate if you press speaker and am waiting for you to close that window.";

//var xc=prompt(divanaudio.replace('<source ', '<source type=audio/' + audiofiles[eval(0 + zero)].split('data:audio/')[1].split(',')[0].split(';')[0] + ' ').replace(' src=""', ' src="' + audiofiles[eval(0 + zero)].replace(/\ /g,'+') + '"').replace('none','BLOCK'),divanaudio.replace('<source ', '<source type=audio/' + audiofiles[eval(0 + zero)].split('data:audio/')[1].split(',')[0].split(';')[0] + ' ').replace(' src=""', ' src="' + audiofiles[eval(0 + zero)].replace(/\ /g,'+') + '"').replace('none','BLOCK'));

document.getElementById('divanaudio').style.width='30%';

document.getElementById('divanaudio').style.marginLeft='35%';

document.getElementById('divanaudio').innerHTML=divanaudio.replace('>',' controls>').replace(' title=""', ' title="Play"').replace('<source ', '<source type=audio/' + audiofiles[eval(0 + zero)].split('data:audio/')[1].split(',')[0].split(';')[0] + ' ').replace(' src=""', ' src="' + audiofiles[eval(0 + zero)].replace(/\ /g,'+') + '"').replace('none','BLOCK');

if (document.getElementById('end' + eval(1 + zero)).value.replace(/^0/g,'-').indexOf('-') == -1) {

segstart=0.0;

segend=eval('' + document.getElementById('end' + eval(1 + zero)).value) + 4 - eval('' + document.getElementById('start' + eval(1 + zero)).value);

setTimeout(everysecond, 1000);

setTimeout(function(){ zero++; seqe(); }, eval(1000 * (eval('' + document.getElementById('end' + eval(1 + zero)).value) + 4 - eval('' + document.getElementById('start' + eval(1 + zero)).value))));

}

} else if (audiofiles[eval(0 + zero)].indexOf('data:video/') == 0) {

isaudio=false;

document.getElementById('subis').style.background=dstyleit('linear-gradient(to right, yellow ' + Math.ceil(eval(100 * (eval(0 + zero) / lzero))) + '%, pink ' + Math.floor(eval(100 - eval(100 * (eval(0 + zero) / lzero)))) + '%) !important');

document.getElementById('subis').value='Currently Playing Video ' + eval(1 + zero) + suff; // + ': ' + audiofiles[eval(0 + zero)].replace(/\ /g,'+'); // + " via Google Translate if you press speaker and am waiting for you to close that window.";

document.getElementById('divanvideo').style.width='100%';

document.getElementById('anvideo').style.width='60%';

document.getElementById('anvideo').style.marginLeft='20%';

document.getElementById('divanvideo').innerHTML=divanvideo.replace('>',' controls>').replace(' title=""', ' title="Play"').replace('<source ', '<source type=video/' + audiofiles[eval(0 + zero)].split('data:video/')[1].split(',')[0].split(';')[0].replace('quicktime','mp4') + ' ').replace(' src=""', ' src="' + audiofiles[eval(0 + zero)].replace(/\ /g,'+') + '"').replace('none','BLOCK');

if (document.getElementById('end' + eval(1 + zero)).value.replace(/^0/g,'-').indexOf('-') == -1) {

setTimeout(everysecond, 1000);

setTimeout(function(){ zero++; seqe(); }, eval(1000 * (eval('' + document.getElementById('end' + eval(1 + zero)).value) + 4 - eval('' + document.getElementById('start' + eval(1 + zero)).value))));

}

} else if (audiofiles[eval(0 + zero)].indexOf('data:image/') == 0) {

isaudio=false;

document.getElementById('subis').style.background=dstyleit('linear-gradient(to right, yellow ' + Math.ceil(eval(100 * (eval(0 + zero) / lzero))) + '%, pink ' + Math.floor(eval(100 - eval(100 * (eval(0 + zero) / lzero)))) + '%) !important');

document.getElementById('subis').value='Currently Showing Image ' + eval(1 + zero) + suff; // + ': ' + audiofiles[eval(0 + zero)].replace(/\ /g,'+'); // + " via Google Translate if you press speaker and am waiting for you to close that window.";

document.getElementById('divanimg').style.width='100%';

document.getElementById('divanimg').style.textAlign='center';

//alert(divanimg.replace(' title=""', ' title="Play"').replace(' src=""', ' src="' + audiofiles[eval(0 + zero)].replace(/\ /g,'+') + '"').replace('none','BLOCK'));

if (document.getElementById('animg').src == '') {

document.getElementById('divanimg').innerHTML=divanimg.replace(' title=""', ' title="Play"').replace(' src=""', ' src="' + audiofiles[eval(0 + zero)].replace(/\ /g,'+') + '"').replace('none','BLOCK');

} else if (document.getElementById('divanimg').innerHTML.indexOf(audiofiles[eval(0 + zero)].replace(/\ /g,'+')) == -1) {

document.getElementById('divanimg').innerHTML+=('<br><img' + divanimg.split('<img')[1].split('</img>')[0] + '</img>').replace(' id=', ' data-id=').replace(' title=""', ' title="Play"').replace(' src=""', ' src="' + audiofiles[eval(0 + zero)].replace(/\ /g,'+') + '"').replace('none','BLOCK');

}

if (document.getElementById('end' + eval(1 + zero)).value.replace(/^0/g,'-').indexOf('-') == -1) {

segstart=0.0;

segend=eval('' + document.getElementById('end' + eval(1 + zero)).value) + 4 - eval('' + document.getElementById('start' + eval(1 + zero)).value);

setTimeout(everysecond, 1000);

setTimeout(function(){ zero++; seqe(); }, eval(1000 * (eval('' + document.getElementById('end' + eval(1 + zero)).value) + 4 - eval('' + document.getElementById('start' + eval(1 + zero)).value))));

}

} else if (audiofiles[eval(0 + zero)] != '') {

isaudio=true;

document.getElementById('subis').style.background=dstyleit('linear-gradient(to right, yellow ' + Math.ceil(eval(100 * (eval(0 + zero) / lzero))) + '%, pink ' + Math.floor(eval(100 - eval(100 * (eval(0 + zero) / lzero)))) + '%) !important');

document.getElementById('subis').value='Currently Playing Audio ' + eval(1 + zero) + ': ' + noplus(audiofiles[eval(0 + zero)]) + " via Google Translate if you press speaker and am waiting for you to close that window " + suff;

wo=window.open('https://translate.google.com/#auto/en/' + encodeURIComponent(noplus(audiofiles[eval(0 + zero)])),'_blank','top=50,left=50,width=600,height=600');

}

setTimeout(precheckget, 20);

}

}

… so as to be happy that our button behaved a lot like a progress bar in our changed splice_audio.htm live run …

… as something we’ve long dreamed of … well, you had to be there!
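For anyone wanting to experiment with the progress bar idea in isolation, here is a minimal sketch of the usual CSS “hard stop” technique, where two colour stops share the same position so that the colours meet at a sharp edge. The helper names progressGradient and overallPercent are hypothetical, and this is the textbook form of the technique rather than a copy of what dstyleit does above.

```javascript
// Hypothetical helper: a left to right gradient with a hard stop at `percent`.
function progressGradient(percent, doneColor, todoColor) {
  var p = Math.max(0, Math.min(100, Math.round(percent)));
  // Two colour stops at the same position give a sharp edge rather than a blend.
  return 'linear-gradient(to right, ' + doneColor + ' ' + p + '%, ' + todoColor + ' ' + p + '%)';
}

// Percentage reached `elapsed` seconds into item `index` (0 based) of `count` items,
// where the current item lasts `length` seconds (compare basepercent and nextpercent above).
function overallPercent(index, count, elapsed, length) {
  var base = 100 * index / count;
  var next = 100 * (index + 1) / count;
  var fraction = length > 0 ? Math.min(1, elapsed / length) : 1;
  return base + fraction * (next - base);
}

console.log(progressGradient(40, 'yellow', 'pink'));
// In a browser: document.getElementById('subis').style.background = progressGradient(40, 'yellow', 'pink');
```

Assigning the string to style.background directly works as long as no “!important” is appended, because the style property assignment ignores values carrying that suffix.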

Previous relevant Spliced Audio/Video Browsing Data URL Tutorial is shown below.

It’s data URL media conduits that make …

- browsing for local files off the client operating system environment … and …

- a genericized “guise” of web server media files … and …

- client File API blob or canvas content media representations

… a point of commonality and, as far as we are concerned, these days we are always looking, whenever we can and data size permitting, to do away with static web server references, which are open to so many mixed content and privilege issues.

Perhaps, that is the major reason, these days, we’ve taken more and more to hashtag based means of communication via “a” “mailto:” (email) or “sms:” (SMS) links.

Today proved to us that the clientside hashtag approach, albeit the most flexible of all, has its data limitations, and that PHP serverside form method=POST does better as far as accommodating large amounts of data.

And so, yesterday, with our Splicing Audio or Video inhouse web application, turning more towards data URLs to solve issues, we started the day …

- trying hashtag clientside based navigations … but ran into data size issues, so …

- introduced into our Splicing Audio or Video inhouse web application, for the first time, PHP serverside involvement …

… that got things working better for us, with the synchronized play of our user entered audio or video media items performing better.
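As a rough illustration of that hashtag versus POST decision, here is a minimal sketch that picks a transport from the size of the data to send. The names chooseTransport and navigateWith, the field name, and especially the 2000 character cut-off are assumptions made for the example, not measured browser limits or code from splice_audio.htm.

```javascript
// Assumption: 2000 characters is only an illustrative cut-off, not a measured limit.
var ASSUMED_MAX_HASH_LENGTH = 2000;

// Hypothetical helper: 'hash' for small payloads, 'post' for large ones.
function chooseTransport(payload, maxHashLength) {
  var limit = typeof maxHashLength === 'number' ? maxHashLength : ASSUMED_MAX_HASH_LENGTH;
  return encodeURIComponent(payload).length <= limit ? 'hash' : 'post';
}

// Browser only: send the payload in the hash, or via a hidden form using method=POST.
function navigateWith(payload, pageUrl, postUrl) {
  if (chooseTransport(payload) === 'hash') {
    window.location.href = pageUrl + '#' + encodeURIComponent(payload);
  } else {
    var form = document.createElement('form');
    form.method = 'POST';
    form.action = postUrl;
    var field = document.createElement('input');
    field.type = 'hidden';
    field.name = 'url'; // hypothetical field name
    field.value = payload;
    form.appendChild(field);
    document.body.appendChild(form);
    form.submit();
  }
}

console.log(chooseTransport('data:audio/mp3;base64,AAAA'));
```

A data URL for real media soon runs to many thousands of characters, which is why the POST branch ends up doing most of the work.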

And so, further to yesterday’s Spliced Audio/Video Data URL Tutorial we added browsing for local media files as a new option for user input, by calling …

- our changed tutorial_to_animated_gif.php PHP animated GIF creator helper web application … hosted in a new iframe element to help collect local file media file(s) … itself calling …

- the changed client_browsing.htm client side local file browsing HTML and Javascript inhouse helper … to help …

- totally new “first draft” PHP “guise of” our Splicing Audio or Video inhouse web application (mainly adding $_POST[] navigation knowledge to the HTML template to work off) … that being …

- our changed splice_audio.htm live run

Previous relevant Spliced Audio/Video Data URL Tutorial is shown below.

We’re revisiting the Spliced Media web application talked about at Spliced Audio/Video Overlay Position Tutorial to shore up some input conduits …

- entering a File as a relative URL to the RJM Programming domain document root …

- entering a File as a fully formed audio or video data URL

… and there is still browsing for local files we’d like to add to that list, but on another day.
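To make those input conduits a little more concrete, here is a minimal sketch that classifies what a user has typed as a data URL or as a URL with a recognised media extension. The function name classifyMediaInput and the extension table are hypothetical; the real mexts and mtypes arrays used by the web application are not shown in this post.

```javascript
// Hypothetical extension table, standing in for the mexts/mtypes arrays.
var SKETCH_TYPES = {
  mp3: 'audio/mpeg', wav: 'audio/wav', mp4: 'video/mp4', webm: 'video/webm',
  gif: 'image/gif', png: 'image/png', jpg: 'image/jpeg'
};

// Hypothetical helper: work out the media category of a textbox value.
function classifyMediaInput(value) {
  var v = String(value).trim();
  var m = /^data:(audio|video|image)\//.exec(v);
  if (m) { return { kind: m[1], source: 'data-url' }; }
  // Strip any hash or query string before looking at the extension.
  var ext = v.split('#')[0].split('?')[0].split('.').pop().toLowerCase();
  if (Object.prototype.hasOwnProperty.call(SKETCH_TYPES, ext)) {
    return { kind: SKETCH_TYPES[ext].split('/')[0], source: 'url' };
  }
  return { kind: 'unknown', source: 'url' };
}

console.log(classifyMediaInput('data:video/mp4;base64,AAAA').kind);
console.log(classifyMediaInput('/Mac/song.MP3?x=1').kind);
```

Anything that comes back as unknown could then be treated as plain text, in the spirit of the text to speech fallback.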

We started considering this work to help the user to fill out a real media duration value that can help with the synchronized playing of the media.

We found that the onloadedmetadata statically defined …

<div id=divanaudio><audio id='anaudio' onload=alere(event); onloadedmetadata=ahere(event); style=display:none;><source id=sanaudio src=""></source></audio></div>

<div id=divanvideo><video id='anvideo' onload=vlere(event); onloadedmetadata=vhere(event); style=display:none;><source id=sanvideo src=""></source></video></div>

<iframe name=myif onload='checkdu(this);' id=myif src=/HTMLCSS/do_away_with_the_boring_bits.html style=display:none;></iframe>

<form id=myifform method=POST style=display:none; target=myif action=/HTMLCSS/do_away_with_the_boring_bits.php>

<input type=hidden name=url id=myifurl value=''></input><input type=hidden name=durto id=myifdurto value=''></input><input type=hidden name=justfgcdu id=myifjustfgcdu value=''></input><input type=submit id=myifb value=Submit style=display:none;></input></form>

… in an audio or video element hosted by a reworked div hosting element was the way to go …

function doinput(sio) {

var ij=0;

if (sio.value.indexOf('data:audio/') == 0) {

if (sio.value.indexOf(';base64,') != -1) {

durtoid='end' + sio.id.replace('audio','');

document.getElementById('sel' + sio.id.replace('audio','')).value='Audio';

document.getElementById('divanaudio').innerHTML=divanaudio.replace('<source ', '<source type=audio/' + sio.value.split('data:audio/')[1].split(',')[0].split(';')[0] + ' ').replace(' src=""', ' src="' + sio.value + '"').replace('none','NONE');

document.getElementById('myif').src='/HTMLCSS/do_away_with_the_boring_bits.php?rand=' + encodeURIComponent(sio.value.split(';base64,')[0] + ';base64,');

document.getElementById('myifurl').value=sio.value;

document.getElementById('myifdurto').value=durtoid;

document.getElementById('myifjustfgcdu').value=sio.value.split(';base64,')[0] + ';base64,';

setTimeout(function(){

document.getElementById('myifb').click();

}, 2000);

}

} else if (sio.value.indexOf('data:video/') == 0) {

if (sio.value.indexOf(';base64,') != -1) {

durtoid='end' + sio.id.replace('audio','');

document.getElementById('sel' + sio.id.replace('audio','')).value='Video';

document.getElementById('divanvideo').innerHTML=divanvideo.replace('<source ', '<source type=video/' + sio.value.split('data:video/')[1].split(',')[0].split(';')[0] + ' ').replace(' src=""', ' src="' + sio.value + '"').replace('none','NONE');

document.getElementById('myif').src='/HTMLCSS/do_away_with_the_boring_bits.php?rand=' + encodeURIComponent(sio.value.split(';base64,')[0] + ';base64,');

document.getElementById('myifurl').value=sio.value;

document.getElementById('myifdurto').value=durtoid;

document.getElementById('myifjustfgcdu').value=sio.value.split(';base64,')[0] + ';base64,';

setTimeout(function(){

document.getElementById('myifb').click();

}, 2000);

}

} else if (sio.value.indexOf('data:image/') == 0) {

durtoid='end' + sio.id.replace('audio','');

} else if ((' ' + sio.value.replace(/\.$/g,'')).slice(-5).indexOf('.') != -1) {

for (ij=0; ij<mexts.length; ij++) {

if ((sio.value + '~').indexOf(mexts[ij] + '~') != -1) {

durtoid='end' + sio.id.replace('audio','');

document.getElementById('sel' + sio.id.replace('audio','')).value=mtypes[ij].split('/')[0].substring(0,1).toUpperCase() + mtypes[ij].split('/')[0].substring(1).toLowerCase();

switch (mtypes[ij].split('/')[0]) {

case "audio":

document.getElementById('myif').src='/HTMLCSS/do_away_with_the_boring_bits.php?url=' + encodeURIComponent(sio.value) + '&durto=end' + sio.id.replace('audio','') + '&justfgcdu=' + encodeURIComponent('data:' + mtypes[ij] + ';base64,');

break;

case "video":

document.getElementById('myif').src='/HTMLCSS/do_away_with_the_boring_bits.php?url=' + encodeURIComponent(sio.value) + '&durto=end' + sio.id.replace('audio','') + '&justfgcdu=' + encodeURIComponent('data:' + mtypes[ij] + ';base64,');

break;

default:

break;

}

}

}

}

audiofiles[eval(-1 + eval(sio.id.replace('audio','')))]=sio.value;

}

function checkdu(iois) {

if (iois.src.indexOf('/do_away_with_the_boring_bits.') != -1 && iois.src.indexOf('/do_away_with_the_boring_bits.htm') == -1) {

var twaconto = (iois.contentWindow || iois.contentDocument);

if (twaconto != null) {

if (twaconto.document) { twaconto = twaconto.document; }

if (twaconto.body.innerHTML.replace(/^\/tmp/g, 'data:audio').indexOf('data:audio/') != -1) {

//alert(twaconto.body.innerHTML);

//document.getElementById('sanaudio').src=twaconto.body.innerHTML;

//alert(divanaudio + ' ... ' + twaconto.body.innerHTML);

if (twaconto.body.innerHTML.indexOf('data:audio/') != -1) {

document.getElementById('divanaudio').innerHTML=divanaudio.replace('<source ', '<source type=audio/' + twaconto.body.innerHTML.split('data:audio/')[1].split(',')[0].split(';')[0] + ' ').replace(' src=""', ' src="' + twaconto.body.innerHTML + '"').replace('none','NONE');

} else {

var pref=1;

if (document.getElementById('audio' + pref).value.indexOf('data:') == -1) {

while (document.getElementById('audio' + pref).value.indexOf('data:') == -1) {

pref++;

}

}

while (document.getElementById('audio' + pref).value.indexOf('data:') == -1) {

pref++;

}

document.getElementById('audio' + pref).value=twaconto.body.innerHTML;

iois.src='/HTMLCSS/do_away_with_the_boring_bits.php?rand=';

}

//alert(document.getElementById('divanaudio').innerHTML);

} else if (twaconto.body.innerHTML.indexOf('data:video/') != -1) {

//document.getElementById('sanvideo').src=twaconto.body.innerHTML;

//alert(divanvideo + ' ... ' + twaconto.body.innerHTML);

document.getElementById('divanvideo').innerHTML=divanvideo.replace('<source ', '<source type=video/' + twaconto.body.innerHTML.split('data:video/')[1].split(',')[0].split(';')[0] + ' ').replace(' src=""', ' src="' + twaconto.body.innerHTML + '"').replace('none','NONE');

//alert(document.getElementById('divanvideo').innerHTML);

}

//alert(twaconto.body.innerHTML);

}

}

}

function ahere(evt) {

if (document.getElementById('sanaudio').src.trim() != '') {

document.getElementById(durtoid).value=Math.ceil(eval('' + document.getElementById('anaudio').duration));

//alert('audio here');

//alert('audio here ' + document.getElementById('anaudio').duration);

}

}

function alere(evt) {

if (document.getElementById('sanaudio').src.trim() != '') {

document.getElementById(durtoid).value=Math.ceil(eval('' + document.getElementById('anaudio').duration));

//alert('audio Here');

}

}

function vhere(evt) {

if (document.getElementById('sanvideo').src.trim() != '') {

document.getElementById(durtoid).value=Math.ceil(eval('' + document.getElementById('anvideo').duration));

//alert('video here');

//alert('video here ' + document.getElementById('anvideo').duration);

}

}

function vlere(evt) {

if (document.getElementById('sanvideo').src.trim() != '') {

document.getElementById(durtoid).value=Math.ceil(eval('' + document.getElementById('anvideo').duration));

//alert('video Here');

}

}

This works if you can fill in the src attribute of the relevant source subelement with a suitable data URL (which we used the changed do_away_with_the_boring_bits.php helper PHP to derive). From there, within that event logic, an [element].duration is available to help fill out those end of play textboxes in a more automated fashion, for the user who wants to use this new functionality as they fill out the Spliced Media form presented.
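The loadedmetadata idea can be sketched without any inline event attributes, as below. The helper names endValueFromDuration and watchDuration are hypothetical. The guard is there because a media element’s duration is NaN until its metadata arrives and can be Infinity for streamed media.

```javascript
// Hypothetical helper: turn a duration into whole seconds for an "end of play"
// textbox, or '' when the duration is not usable yet.
function endValueFromDuration(duration) {
  return (typeof duration === 'number' && isFinite(duration) && duration > 0)
    ? String(Math.ceil(duration))
    : '';
}

// Hypothetical wiring: fill the textbox once the media element knows its duration.
function watchDuration(media, textbox) {
  media.addEventListener('loadedmetadata', function () {
    var value = endValueFromDuration(media.duration);
    if (value !== '') { textbox.value = value; }
  });
}

console.log(endValueFromDuration(12.3)); // "13"
// In a browser: watchDuration(document.getElementById('anaudio'), document.getElementById('end1'));
```

Rounding up with Math.ceil matches the ahere and vhere functions above, so the play window never cuts the media short.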

Previous relevant Spliced Audio/Video Overlay Position Tutorial is shown below.

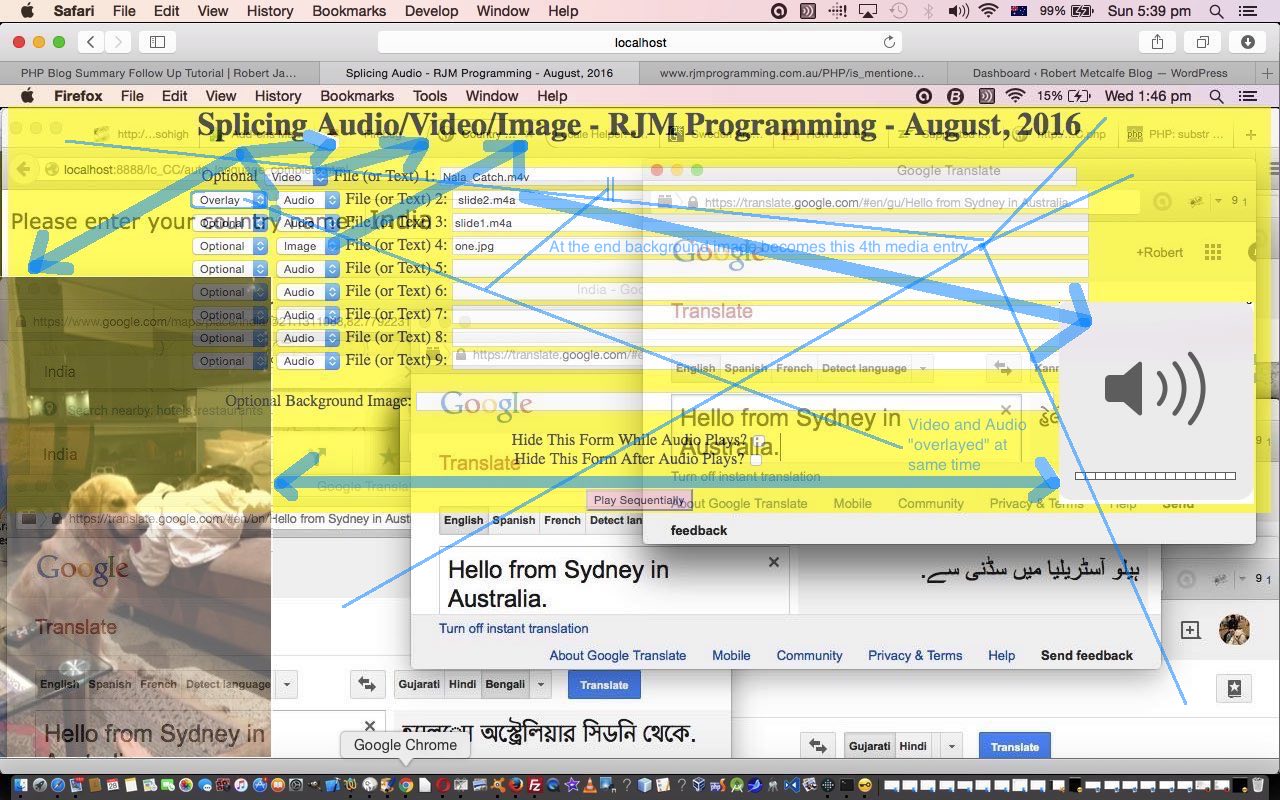

Today we’ve written a third draft of an HTML and Javascript web application that splices up to nine bits of audio or video or image input together, building on the previous Spliced Audio/Video/Image Overlay Tutorial as shown below, here, and that can take any of the forms …

- audio file … and less user friendly is …

- text that gets turned into speech via Google Translate (and user induced Text to Speech functionality), but needs your button presses

- video

- image … and background image for webpage

… for either of the modes of use, that being …

- discrete … or “Optional”

- synchronized … or “Overlay”

… all like yesterday, but this time we allow you to “seek” or position yourself within the audio and/or video media. We still “fit” all of this into GET parameter usage. Are you thinking we are a tad lazy with this approach? Well, perhaps a little, but it also means you can do this job just using clientside HTML and Javascript, without having to involve any serverside code like PHP, and in this day and age, people are much keener on this “just clientside” or “just client looking, plus, perhaps, Javascript serverside code” (à la Node.js) or perhaps “Javascript clientside client code, plus Ajax methodologies”. In any case, it does simplify design to not have to involve a serverside language like PHP … but please don’t think we do not encourage you to learn a serverside language like PHP.

While we are at it here, we continue to think about the mobile device unfriendliness with our current web application, it being, these days, that the setting of the autoplay property for a media object is frowned upon regarding these mobile devices … for reasons of “runaway” unknown charge issues as you can read at this useful link … thanks … and where they quote from Apple …

“Apple has made the decision to disable the automatic playing of video on iOS devices, through both script and attribute implementations.

In Safari, on iOS (for all devices, including iPad), where the user may be on a cellular network and be charged per data unit, preload and auto-play are disabled. No data is loaded until the user initiates it.” – Apple documentation.

A link we’d like to thank regarding the new “seek” or media positioning functionality is this one … thanks.
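As a rough picture of that “seek” functionality, here is a minimal sketch that plays only a window of a media element, seeking by assigning to currentTime and pausing once the end of the window is reached. The function name playWindow is hypothetical, and this is one common way to do it rather than necessarily the approach used in splice_audio.htm.

```javascript
// Hypothetical helper: play only the window [start, end] (in seconds) of a media element.
function playWindow(media, start, end) {
  function onTime() {
    if (media.currentTime >= end) {
      media.pause();
      media.removeEventListener('timeupdate', onTime);
    }
  }
  media.addEventListener('timeupdate', onTime);
  media.currentTime = start; // seeking is an assignment to currentTime
  return media.play();       // may be blocked until the user interacts, notably on mobile
}

// In a browser: playWindow(document.getElementById('anvideo'), 23, 47);
```

Because timeupdate fires only a few times a second, play can run slightly past the requested end before the pause takes effect.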

Also, today, for that sense of symmetry, we start to create the Audio objects from now on using …

document.createElement("AUDIO");

… as this acts the same as new Audio() to the best of our testing.

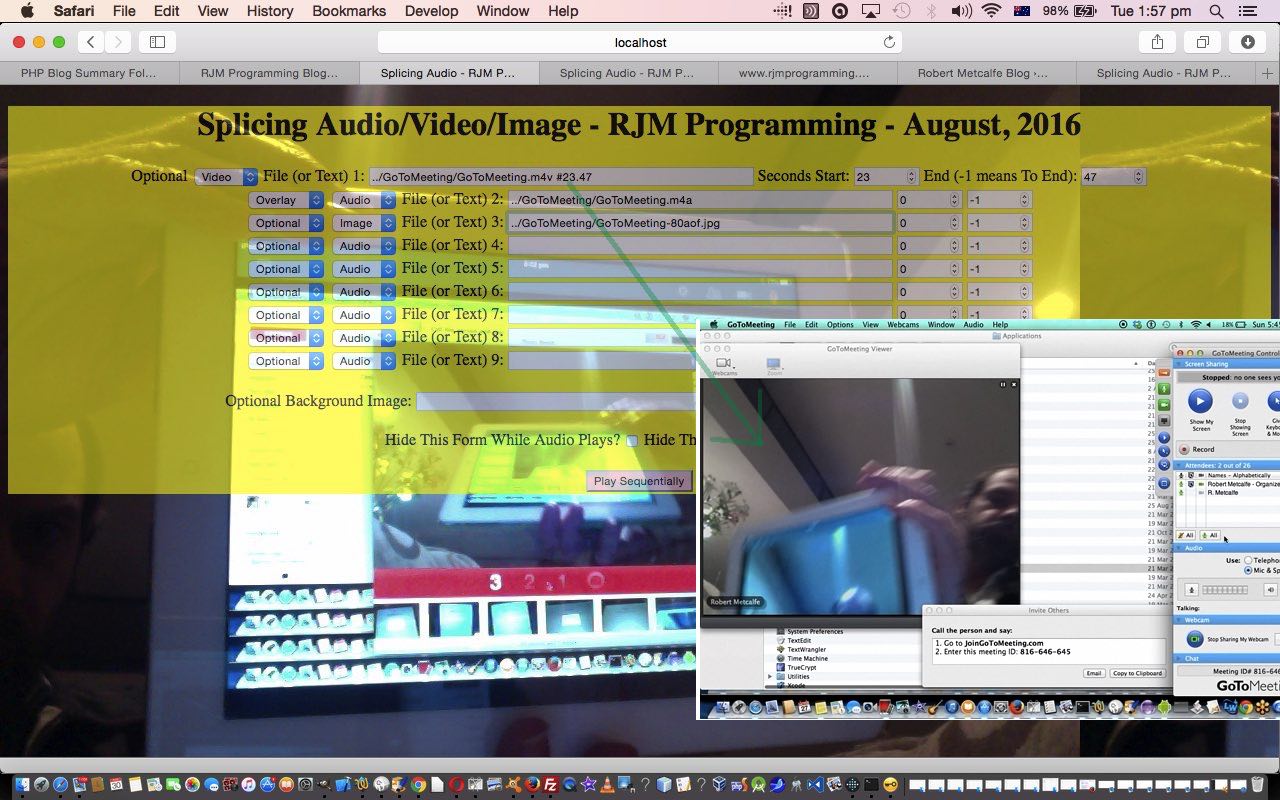

For your own testing purposes, if you know of some media URLs to try, please feel free to try the “overlay” of media ideas inherent in today’s splice_audio.htm live run. For today’s cake “prepared before the program” we’ve again channelled the GoToMeeting Primer Tutorial which had separate audio (albeit very short … sorry … but you get the gist) and video … well, below, you can click on the image to hear the presentation with audio and video synchronized, but only seconds 23 through to 47 of the video should play, and the presentation ending with the image below …

We think, though, that we will be back regarding this interesting topic, and hope we can improve mobile device functionality.

Previous relevant Spliced Audio/Video/Image Overlay Tutorial is shown below.

Today we’ve written a second draft of an HTML and Javascript web application that splices up to nine bits of audio or video or image input together, building on the previous Splicing Audio Primer Tutorial as shown below, here, and that can take any of the forms …

- audio file … and less user friendly is …

- text that gets turned into speech via Google Translate (and user induced Text to Speech functionality), but needs your button presses

- video

- image … and background image for webpage

… for either of the modes of use, that being …

- discrete … or “Optional”

- synchronized … or “Overlay”

The major new change here, apart from the ability to play two media files at once in our synchronized (or “overlayed”) way, is the additional functionality for Video, and we proceeded thinking there’d be a Javascript DOM OOPy method like … var xv = new Video(); … to allow for this, but found out from this useful link … thanks … that an alternative approach for Video object creation, on the fly, is …

var xv = document.createElement("VIDEO");

… curiously. And it took us a while to tweak to the idea that to have a “display home” for the video on the webpage we needed to …

document.body.appendChild(xv);

… which means you need to take care of any HTML form data already filled in, that isn’t that form’s default, when you effectively “refresh” the webpage like this. Essentially though, media on the fly is a modern approach possible fairly easily with just clientside code. Cute, huh?!
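Putting those two calls together, here is a minimal sketch of creating a media element on the fly and giving it a “display home”. The function name addMedia and the file name in the usage comment are hypothetical, and the document is passed in as a parameter purely so that the sketch can also be exercised outside a browser.

```javascript
// Hypothetical helper: create an audio or video element and append it to the page.
function addMedia(doc, kind, src) {
  var el = doc.createElement(kind === 'video' ? 'VIDEO' : 'AUDIO');
  el.src = src;
  el.controls = true; // show the browser's own play controls
  doc.body.appendChild(el);
  return el;
}

// In a browser: addMedia(document, 'video', 'movie.mp4');
```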

Of course, what we still miss here is the capability to upload from a local place onto the web server, here at RJM Programming, which we may consider in future, and which features in some of those other synchronization of media themed blog postings of the past, which you may want to read for more on this type of approach.

In the meantime, if you know of some media URLs to try, please feel free to try the “overlay” of media ideas inherent in today’s splice_audio.htm live run. We’ve thought of this one. Do you remember how the GoToMeeting Primer Tutorial had separate audio (albeit very short … sorry … but you get the gist) and video … well, below, you can click on the image to hear the presentation with audio and video synchronized, and the presentation ending with the image below …

We think, though, that we will be back regarding this interesting topic.

Previous relevant Splicing Audio Primer Tutorial is shown below.

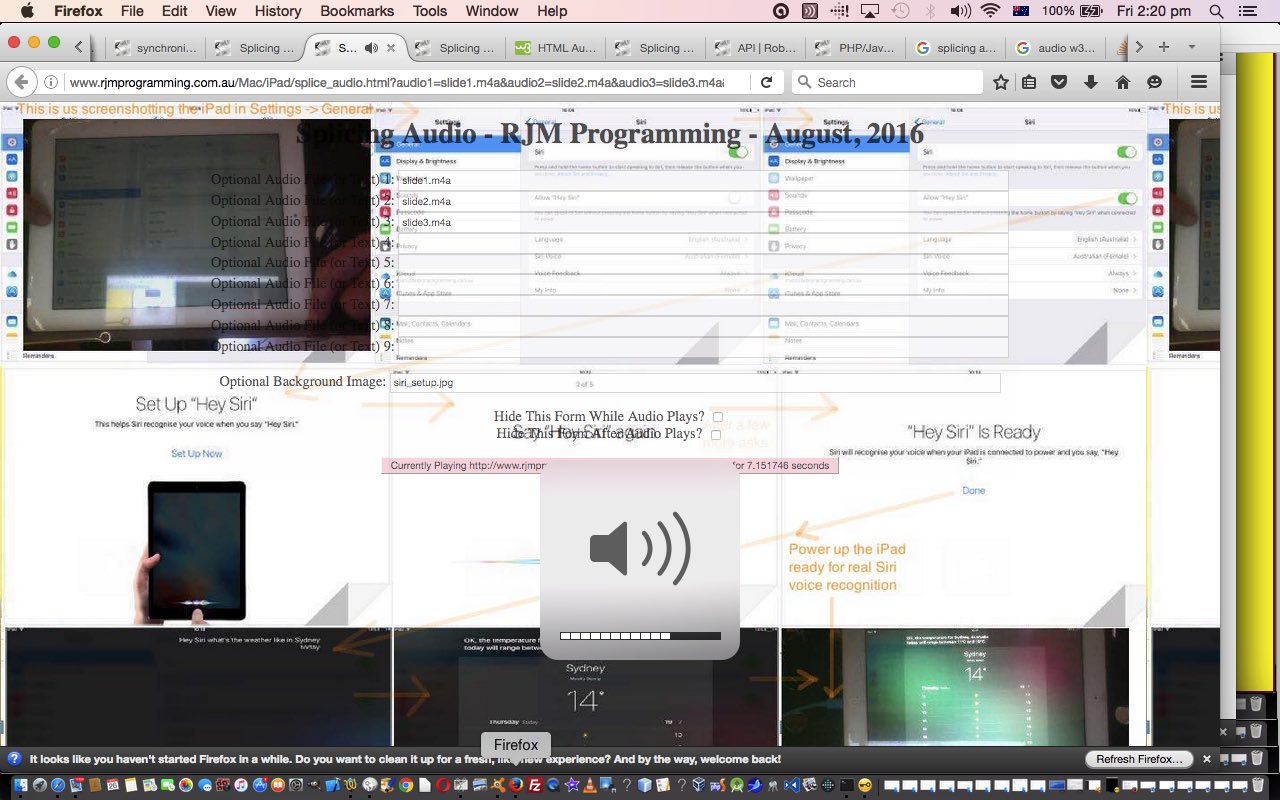

Today we’ve written a first draft of an HTML and Javascript web application that splices up to nine bits of audio input together that can take either of the forms …

- audio file … and less user friendly is …

- text that gets turned into speech via Google Translate (and user induced Text to Speech functionality), but needs your button presses

Do you remember, perhaps, when we did a series of blog posts regarding the YouTube API, that finished, so far, with YouTube API Iframe Synchronicity Resizing Tutorial? Well, a lot of what we do today is doing similar sorts of functionalities but just for Audio objects in HTML5. For help on this we’d like to thank this great link. So rather than have HTML audio elements in our HTML, as we first shaped to do, we’ve taken the great advice from this link, and gone all Javascript DOM OOPy on the task, to splice audio media together.
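To show the bare bones of that Javascript DOM OOPy approach, here is a minimal sketch that plays audio items one after another by listening for each one’s ended event. The function name playInSequence and the file names in the usage comment are hypothetical, and the player factory is passed in so that the sketch is not tied to a browser.

```javascript
// Hypothetical sketch: play audio URLs one after another using the 'ended' event.
function playInSequence(urls, makeAudio) {
  var index = 0;
  function next() {
    if (index >= urls.length) { return; } // nothing left to play
    var player = makeAudio(urls[index++]);
    player.addEventListener('ended', next); // start the next item when this one finishes
    player.play();
  }
  next();
}

// In a browser:
// playInSequence(['platform.mp3', 'six.mp3'], function (url) { return new Audio(url); });
```

Any gap between items then depends on how quickly the next file loads, so preloading the audio would be the natural refinement.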

There were three thought patterns going on here for me.

- The first was a simulation of those Sydney train public announcements where the timbre of the voice differs a bit between when they say “Platform” and the “6” (or whatever platform it is) that follows. This is pretty obviously computer audio “bits” strung together … and we wanted to get somewhere towards that capability.

- The second one relates to presentation ideas following up on that “onmouseover” Siri audio enhanced presentation we did at Apple iOS Siri Audio Commentary Tutorial. Well, we think we can do something related to that here, and we’ve prepared this cake audio presentation here, for us, in advance … really, there’s no need for thanks.

- The third concerns our eternal media file synchronization quests here at this blog that you may find of interest we hope, here.

Also of interest over time has been the Google Translate Text to Speech functionality that used to be very open, and we now only use around here in an interactive “user clicks” way … but we still use it, because it is very useful, so, thanks. But trying to get this method working for “Platform” and “6” without a yawning gap in between ruins the spontaneity and fun somehow, but there’s nothing stopping you making your own audio files yourself as we did in that Siri tutorial called Apple iOS Siri Audio Commentary Tutorial and take the HTML and Javascript code you could call splice_audio.html from today, and go and make your own web application? Now, is there? Huh?

Try a live run or perhaps some more Siri cakes?!

- Audio with Background then Form for another, perhaps

- Audio with Background and Form showing the whole time

- Audio with no Background then Form for another, perhaps

- Just Audio

If this was interesting you may be interested in this too.
