The Linux (or Unix) command cURL is a powerful command-line tool that, if installed, can help you gather data by means of data scraping (or screen scraping or, as in our case, web scraping) from the web, all within the Linux command-line environment.

Link this to batch processing ideas and a lot of programmer mouth-watering will result … sometimes not a pretty sight … so it is better to have the watermelon at the ready, though even there some etiquette rules might not cover the seeds … I am sure Demtel sell a seed remover … but one digresses. Unfortunately, this will just have to be one of those graphs called “para” … chortle, chortle.
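Linking curl to batch processing can be as simple as a loop. The sketch below is a hypothetical illustration, not code from the tutorial: it assumes a plain-text file holding one URL per line (blank lines and lines starting with `#` are skipped), and the function name `fetch_all` and the `page_N.html` naming scheme are made-up choices.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: fetch every URL listed (one per line) in a text file
# and save each page as page_1.html, page_2.html, ... in an output directory.
# The list-file format and file naming are illustrative assumptions.
set -euo pipefail

fetch_all() {
  local url_list="$1" out_dir="$2"
  local n=0 url
  mkdir -p "$out_dir"
  # "|| [[ -n $url ]]" also handles a last line with no trailing newline
  while IFS= read -r url || [[ -n "$url" ]]; do
    # Skip blank lines and comment lines starting with '#'
    [[ -z "$url" || "$url" == \#* ]] && continue
    n=$((n + 1))
    # -s: silent, -S: still show errors, -L: follow redirects,
    # -f: exit non-zero on HTTP errors, -o: write the body to a file
    if curl -sSLf -o "$out_dir/page_$n.html" "$url"; then
      echo "saved $url -> $out_dir/page_$n.html"
    else
      echo "failed: $url" >&2
    fi
  done < "$url_list"
}

if [[ $# -eq 2 ]]; then
  fetch_all "$1" "$2"
else
  echo "usage: $0 URL_LIST_FILE OUTPUT_DIR" >&2
fi
```

A failed download is reported and skipped rather than stopping the whole batch, which tends to suit scraping jobs where one dead link should not waste the rest of the run.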

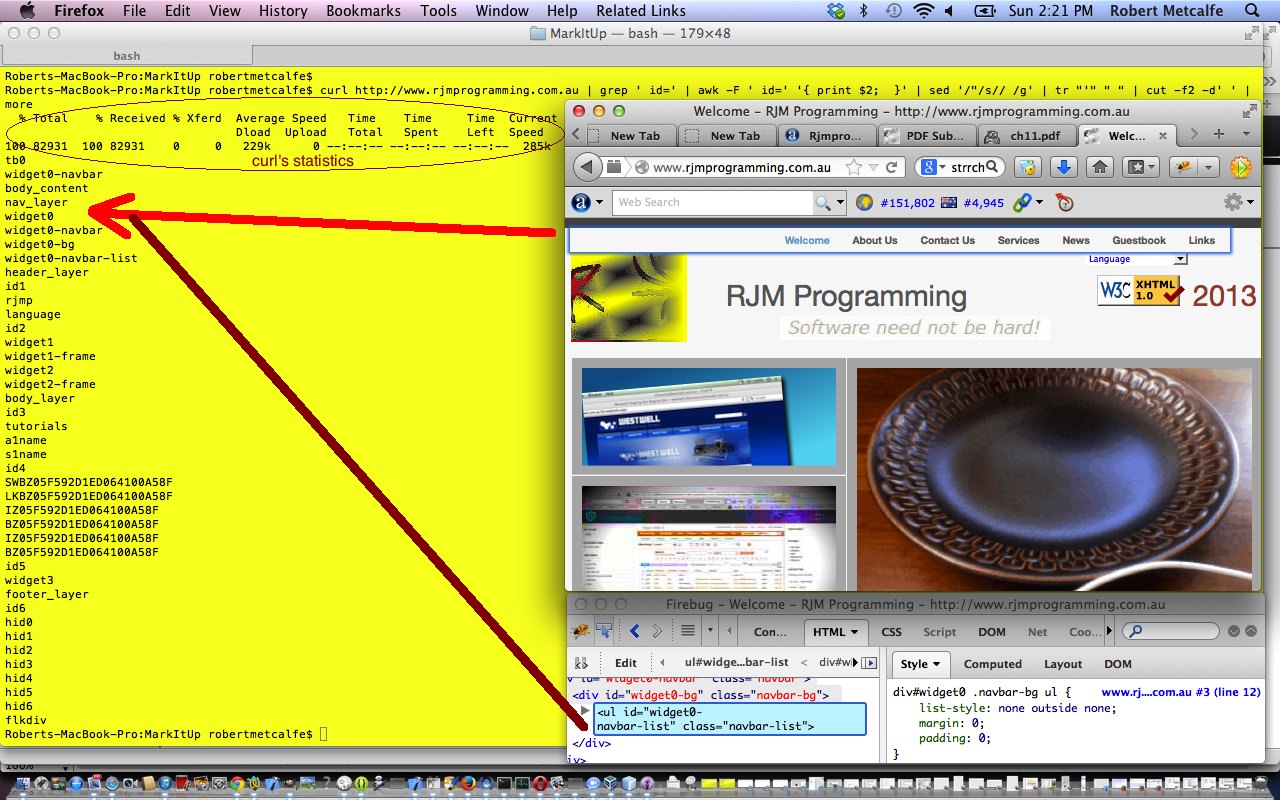

Here is a link to some Mac Terminal Bash script code, which you could save as curl_as_a_cucumber.sh, to try what the tutorial does (extract the HTML element id= values from a webpage). If you need advice on installing cURL … try this.
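As a rough, hypothetical sketch of the same idea (not the tutorial's own curl_as_a_cucumber.sh), curl's output can be piped through grep and sed to list the id= values. It assumes id values are quoted and that each id= attribute is preceded by whitespace on the same line; the function name `extract_ids` is made up for illustration, and a regular expression like this is only an approximation of what a real HTML parser would find.

```shell
#!/usr/bin/env bash
# Hypothetical sketch (not the tutorial's curl_as_a_cucumber.sh):
# fetch a page with curl and list the unique values of its id= attributes.
# A regular expression is a rough approximation, not a real HTML parser.
set -euo pipefail

# Read HTML on stdin, print each distinct id value once, sorted.
extract_ids() {
  # Match whitespace + id= followed by a double- or single-quoted value.
  # Requiring the leading whitespace skips attributes such as data-id=.
  # "|| true" keeps grep's "no match" status (1) from aborting under pipefail.
  { grep -oE "[[:space:]]id=(\"[^\"]*\"|'[^']*')" || true; } \
    | sed -E "s/^[[:space:]]id=[\"']//; s/[\"']\$//" \
    | LC_ALL=C sort -u
}

if [[ $# -eq 1 ]]; then
  # -s silent, -S show errors anyway, -L follow redirects,
  # -f exit non-zero on HTTP errors instead of printing the error page
  curl -sSLf "$1" | extract_ids
else
  echo "usage: $0 URL" >&2
fi
```

Run it as, say, `bash list_ids.sh https://www.example.com/` (the file name is arbitrary). Swapping `curl -sSLf "$1"` for `cat "$1"` would run the same filter over a page already saved to disk.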

Background reading could be:

If this was interesting, you may be interested in this too.